Author: Kees Blokland ● kees.blokland@planet.nl

Editors: Frits van Iddekinge and Paul Beving

We design our automated tests to fail when there is a problem in our software. Why, then, should our test design also consider the reasons a test should NOT fail? An explanation.

When a test should fail

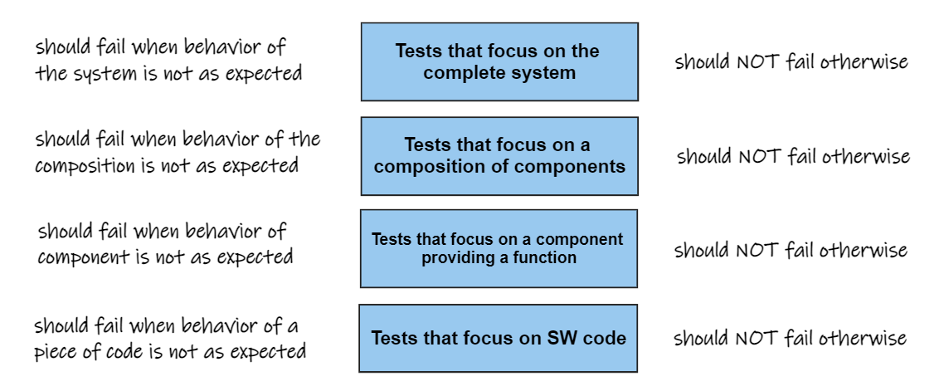

The picture above shows four levels of scope. They align with the steps we follow when testing our software and integrating it into, ultimately, The Big System. We want tests that find problems in the cheapest way. Most of the time that means finding them in the smallest compartment in which the problem is detectable. A problem here means unexpected behavior, such as not meeting requirements.

The text on the left side of the picture is probably obvious to everyone: a test fails when the behavior of a piece of code, component, composition or full system is not as expected. But what about the text on the right side?

When a test should NOT fail

A test that fails while there is no problem in the system produces what is called a false positive. I prefer the term ‘false fail’, because I find that people often confuse what positive and negative mean in this context. So, a false fail is a test result that fails because of something that is NOT a problem in the subject of the test. The test itself is broken: it reports a problem in its subject where there is none. False fails can have several causes, such as:

- The test depends on another part of the system that turns out to have a problem. One could argue that this is still a true positive, but the problem lies outside the objective of the test, and other tests should find it directly;

- The test data is broken. In my organization we often compare test results against reference data. If that data changes, the test breaks even though the system still behaves correctly;

- The test is sensitive to noise, such as timing variations that lead to unstable results.
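To make the second cause concrete, here is a minimal Python sketch. It assumes a hypothetical `render_report` component and a shared price table standing in for environment data; neither comes from the article. The component is identical in both runs and only the data changes, yet the comparison against the stored reference report fails.

```python
def render_report(prices: dict[str, float]) -> str:
    """Hypothetical component: one line per item, sorted by name, two decimals."""
    return "\n".join(f"{name}: {price:.2f}" for name, price in sorted(prices.items()))


# Reference report captured once, when the environment held the original prices.
REFERENCE_REPORT = "apple: 1.00\npear: 2.50"


def reference_test(prices: dict[str, float]) -> bool:
    """Passes only if the full output equals the stored reference report."""
    return render_report(prices) == REFERENCE_REPORT


data_before = {"pear": 2.5, "apple": 1.0}
# Someone updated the shared data; render_report itself did not change.
data_after = {"pear": 2.75, "apple": 1.0}

print(reference_test(data_before))  # True
print(reference_test(data_after))   # False: a false fail, the component is still correct
```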

False fails are bad

Because:

- The development team that is responsible for the test must spend effort on it even though there is no problem with the software the test was written for. They lose focus on their sprint goals and the team’s efficiency drops;

- Builds in CI/CD pipelines fail while the software is fine, causing unwanted delays in the development flow.

People in other roles may also be forced to waste time handling false fails.

Preventing false fails

Preventing false fails pays off. Without them, development teams and others are spared interruptions that distract them from delivering value. The same holds for the integration teams involved in higher test levels such as end-to-end testing.

Measures to prevent false fails include:

- Test in isolation. When the subject of the test is isolated from its surroundings, the test no longer depends on other parts of the system, which prevents the first two causes of false fails listed above. In my organization this is one of the challenges we face. For unit testing, isolation is easy and should in fact be the standard way of working. Testing in isolation requires test doubles for everything outside the subject of the test that the test needs in order to run;

- Make tests robust against data changes. Tests that compare an output report with a reference report are vulnerable to data changes. Such tests do fail when our component of the system has a problem, but they also fail when data in the environment changes while nothing is wrong with our component. To reduce this vulnerability, we need other ways to assert that our component works properly;

- Make tests robust against noise sources. I see people blaming an ‘unstable environment’ for the unstable results of certain tests. Often the real problem is that we do not understand the system or test environment well enough to anticipate its behavior in the design of our tests. To prevent false fails caused by noise, we need to learn this behavior and use that knowledge to make our tests more robust.
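The three measures can be sketched with Python's standard `unittest` and `unittest.mock` modules. This is a minimal illustration under stated assumptions, not the author's actual setup. `PriceService`, `invoice_total` and `wait_until` are hypothetical names, and the 21% VAT rate is an arbitrary choice. The test double replaces the dependency so only our component is exercised. The expected value is derived from inputs the test controls rather than from a stored reference report. The polling helper waits for an outcome with a timeout instead of relying on a fixed sleep.

```python
import threading
import time
import unittest
from unittest import mock


class PriceService:
    """Hypothetical dependency outside the subject of the test (e.g. a remote service)."""

    def price_of(self, item: str) -> float:
        raise RuntimeError("not reachable in an isolated test")


def invoice_total(items: list[str], prices: PriceService, vat: float = 0.21) -> float:
    """Hypothetical subject of the test: sums item prices and adds VAT."""
    net = sum(prices.price_of(item) for item in items)
    return round(net * (1 + vat), 2)


def wait_until(condition, timeout: float = 2.0, interval: float = 0.01) -> bool:
    """Poll `condition` until it holds or `timeout` seconds pass, instead of a fixed sleep."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval)
    return condition()


class InvoiceTotalTest(unittest.TestCase):
    def test_total_in_isolation(self):
        # Test double: the real PriceService is replaced, so a problem in it
        # (or in the data behind it) cannot make this test fail.
        prices = mock.Mock(spec=PriceService)
        prices.price_of.side_effect = {"a": 10.0, "b": 5.0}.get
        # Expected value follows from inputs we control: (10 + 5) * 1.21.
        self.assertAlmostEqual(invoice_total(["a", "b"], prices), 18.15)
        prices.price_of.assert_any_call("a")

    def test_background_work_completes(self):
        done = []
        worker = threading.Timer(0.05, lambda: done.append(True))
        worker.start()
        # Robust against timing noise: wait for the outcome with a generous
        # timeout rather than asserting after a tuned, fixed sleep.
        finished = wait_until(lambda: bool(done))
        worker.join()
        self.assertTrue(finished)


if __name__ == "__main__":
    unittest.main()
```

The generous timeout in `wait_until` only costs time when the outcome is late, whereas a tuned fixed sleep fails whenever the environment happens to be a little slower than usual.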

Let’s go for zero false fails!